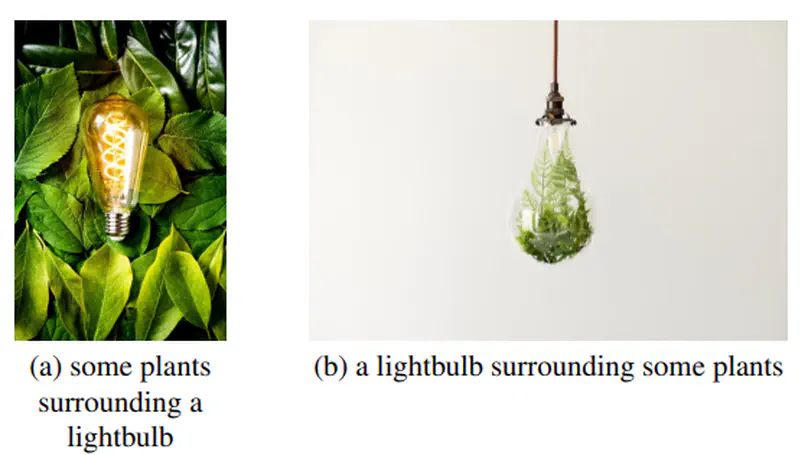

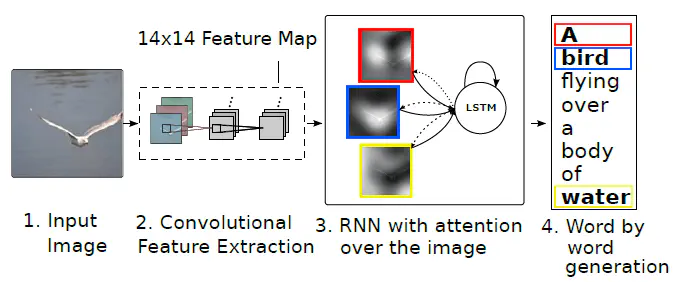

Image Caption Generation

Image from paper Show, Attend and Tell

Project 71: Automatic Image Caption Generation

Authors: Oihane Cantero and Julen Etxaniz

Supervisors: Oier Lopez de Lacalle and Eneko Agirre

Subject: Machine Learning and Neural Networks

Date: 20-12-2020

Objective: Implement a caption generation model that uses a CNN to condition an LSTM-based language model.

Contents:

1. Import Libraries
2. Get Dataset
3. Prepare Photo Data
4. Prepare Text Data
5. Load Data
6. Encode Text Data
7. Define Model
8. Fit Model
9. Evaluate Model
10. Generate Captions

References

[1] https://arxiv.org/pdf/1411.4555.pdf

[2] https://machinelearningmastery.com/develop-a-deep-learning-caption-generation-model-in-python/

[3] https://github.com/dabasajay/Image-Caption-Generator

[4] https://github.com/yashk2810/Image-Captioning

1. Import Libraries

# Prepare Photo Data
from os import listdir
from os.path import isfile
from pickle import dump
from tqdm import tqdm
from keras.models import Model
from keras.applications.vgg16 import VGG16
from keras.applications.inception_v3 import InceptionV3
from keras.preprocessing.image import load_img
from keras.preprocessing.image import img_to_array
import matplotlib.pyplot as plt

# Prepare Text Data
import string

# Load Data
from pickle import load

# Encode Text Data
from keras.preprocessing.text import Tokenizer

# Define Model
from keras.utils import plot_model
from keras.models import load_model
from keras.layers import Input
from keras.layers import Dense
from keras.layers import LSTM
from keras.layers import Embedding
from keras.layers import Dropout
# same function as keras.layers.merge.add, imported from the top-level layers module
from keras.layers import add
from keras.layers import RepeatVector, TimeDistributed, concatenate, Bidirectional

# Fit Model
import numpy as np
from keras.preprocessing.sequence import pad_sequences
from keras.utils import to_categorical

# Evaluate Model
from numpy import argmax, argsort
from nltk.translate.bleu_score import corpus_bleu, sentence_bleu, SmoothingFunction

# Generate Captions
from IPython.display import Image, display

2. Get Dataset

We use the Flickr8k dataset, which can be downloaded from: https://github.com/jbrownlee/Datasets/releases/tag/Flickr8k

It contains 8092 images with 5 captions each, because the same image can be described in several valid ways. The dataset comes with predefined training, testing and evaluation subsets of 6000, 1000 and 1000 images respectively.
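To make the one-image, several-captions layout concrete, here is a minimal sketch of grouping captions by image id. It assumes each line of the caption file has the form `<image name>#<index><TAB><caption>`, which is our understanding of the Flickr8k token file; the sample lines and the `group_captions` helper are made up for illustration and are not part of the notebook.

```python
from collections import defaultdict

# Made-up sample in the assumed "<image name>#<index>\t<caption>" layout.
SAMPLE = (
    "example_1.jpg#0\tA dog runs on the grass .\n"
    "example_1.jpg#1\tA brown dog is running .\n"
    "example_2.jpg#0\tTwo children play in the sand .\n"
)


def group_captions(text):
    """Return {image_id: [caption, ...]} from the text of a caption file."""
    captions = defaultdict(list)
    for line in text.splitlines():
        if not line.strip():
            continue  # skip empty lines
        key, caption = line.split("\t", 1)
        # drop the "#index" suffix and the file extension to get the image id
        image_id = key.split("#")[0].rsplit(".", 1)[0]
        captions[image_id].append(caption.strip())
    return dict(captions)


captions = group_captions(SAMPLE)
print({image_id: len(caps) for image_id, caps in captions.items()})
```

On the full dataset, every image id would be expected to map to a list of 5 captions.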

3. Prepare Photo Data

We use two different pretrained CNNs to extract image features: VGG16 and InceptionV3.

# preprocess the image for the model
# (preprocess_input is the function imported in section 3.1 or 3.2, whichever ran last)
def preprocess_image(filename, image_size):
    image = load_img(filename, target_size=(image_size, image_size))
    # convert the image pixels to a numpy array
    image = img_to_array(image)
    # add a batch dimension: (height, width, channels) -> (1, height, width, channels)
    image = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))
    # apply the model-specific pixel preprocessing
    image = preprocess_input(image)
    return image

# extract features from each photo in the directory
def extract_features(directory, model, image_size):
    # map image id -> feature array
    features = dict()
    for name in tqdm(listdir(directory), position=0, leave=True):
        # load an image from file
        filename = directory + '/' + name
        # preprocess the image for the model
        image = preprocess_image(filename, image_size)
        # get features
        feature = model.predict(image, verbose=0)
        # get image id (file name without extension)
        image_id = name.split('.')[0]
        # store feature
        features[image_id] = feature
    return features

# save a diagram of the model to a file and display it
def plot(model, filename):
    plot_model(model, to_file=filename, show_shapes=True, show_layer_names=False)
    display(Image(filename))
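`extract_features` passes every entry returned by `listdir` to `load_img`, so a stray non-image file in the directory would likely make the loop fail. The sketch below shows one way to derive the dictionary keys while skipping such files. The helper `image_ids` and the list of accepted extensions are our own assumptions, not part of the notebook.

```python
from os.path import splitext


def image_ids(names, extensions=(".jpg", ".jpeg", ".png")):
    """Map each image file name to the id used as the feature dictionary key."""
    ids = {}
    for name in names:
        stem, ext = splitext(name)
        if ext.lower() not in extensions:
            continue  # not an image file, skip it
        ids[name] = stem
    return ids


names = ["667626_18933d713e.jpg", ".DS_Store", "notes.txt"]
print(image_ids(names))
```

For Flickr8k file names, which contain a single dot, `splitext` gives the same id as `name.split('.')[0]`.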

3.1. VGG16 Model

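from keras.applications.vgg16 import preprocess_input

# cnn VGG16 model
def cnn_vgg16():
    # load the model
    model = VGG16()
    # re-structure the model: drop the final classification layer,
    # so the output is the 4096-dimensional fc2 activation
    model = Model(inputs=model.inputs, outputs=model.layers[-2].output)
    # summarize
    print(model.summary())
    return model

# get cnn vgg16 model
# (note: this rebinds the name cnn_vgg16 from the function to the model)
cnn_vgg16 = cnn_vgg16()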

Downloading data from https://storage.googleapis.com/tensorflow/keras-applications/vgg16/vgg16_weights_tf_dim_ordering_tf_kernels.h5

553467904/553467096 [==============================] - 4s 0us/step

Model: "model"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         [(None, 224, 224, 3)]     0
block1_conv1 (Conv2D)        (None, 224, 224, 64)      1792
block1_conv2 (Conv2D)        (None, 224, 224, 64)      36928
block1_pool (MaxPooling2D)   (None, 112, 112, 64)      0
block2_conv1 (Conv2D)        (None, 112, 112, 128)     73856
block2_conv2 (Conv2D)        (None, 112, 112, 128)     147584
block2_pool (MaxPooling2D)   (None, 56, 56, 128)       0
block3_conv1 (Conv2D)        (None, 56, 56, 256)       295168
block3_conv2 (Conv2D)        (None, 56, 56, 256)       590080
block3_conv3 (Conv2D)        (None, 56, 56, 256)       590080
block3_pool (MaxPooling2D)   (None, 28, 28, 256)       0
block4_conv1 (Conv2D)        (None, 28, 28, 512)       1180160
block4_conv2 (Conv2D)        (None, 28, 28, 512)       2359808
block4_conv3 (Conv2D)        (None, 28, 28, 512)       2359808
block4_pool (MaxPooling2D)   (None, 14, 14, 512)       0
block5_conv1 (Conv2D)        (None, 14, 14, 512)       2359808
block5_conv2 (Conv2D)        (None, 14, 14, 512)       2359808
block5_conv3 (Conv2D)        (None, 14, 14, 512)       2359808
block5_pool (MaxPooling2D)   (None, 7, 7, 512)         0
flatten (Flatten)            (None, 25088)             0
fc1 (Dense)                  (None, 4096)              102764544
fc2 (Dense)                  (None, 4096)              16781312
=================================================================
Total params: 134,260,544
Trainable params: 134,260,544
Non-trainable params: 0
_________________________________________________________________
None
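The parameter counts in the summary above can be checked by hand: a convolution has one weight per kernel position and input channel plus one bias, for each output channel, and a dense layer has one weight per input plus one bias, for each output unit. The sketch below redoes this arithmetic, assuming the 3x3 kernels of the standard VGG16 architecture; the helper names are ours.

```python
def conv_params(kernel, in_channels, out_channels):
    """Weights plus one bias per output channel for a square Conv2D kernel."""
    return (kernel * kernel * in_channels + 1) * out_channels


def dense_params(in_units, out_units):
    """Weights plus one bias per output unit for a Dense layer."""
    return (in_units + 1) * out_units


# (in_channels, out_channels) of the 13 convolutions, read from the summary
CONVS = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]
# fc1 takes the flattened 7 x 7 x 512 block5_pool output, fc2 takes fc1
DENSES = [(7 * 7 * 512, 4096), (4096, 4096)]

total = sum(conv_params(3, i, o) for i, o in CONVS)
total += sum(dense_params(i, o) for i, o in DENSES)
print(total)  # 134260544, matching "Total params" in the summary
```

Most of the parameters sit in fc1, which alone accounts for 102,764,544 of them.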

# plot model
filename = 'models/cnn_vgg16.png'
plot(cnn_vgg16, filename)

filename = 'files/features_vgg16.pkl'
# only extract if file does not exist
if not isfile(filename):
    # extract features from all images
    directory = 'Flickr8k_Dataset'
    features = extract_features(directory, cnn_vgg16, 224)
    # save to file, closing the file handle afterwards
    with open(filename, 'wb') as f:
        dump(features, f)
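The "only extract if file does not exist" pattern above is a simple on-disk cache, and the same pattern applies to any expensive computation. A minimal sketch of it as a reusable helper follows; `load_or_compute`, `fake_features` and the file name are hypothetical and only stand in for the real feature extraction.

```python
import pickle
import tempfile
from os.path import isfile, join


def load_or_compute(path, compute):
    """Return the pickled object at `path`, computing and saving it first if missing."""
    if isfile(path):
        with open(path, "rb") as f:
            return pickle.load(f)
    result = compute()
    with open(path, "wb") as f:
        pickle.dump(result, f)
    return result


calls = []


def fake_features():
    """Stand-in for the expensive extraction step; records each call."""
    calls.append(1)
    return {"example_1": [0.0, 1.0]}


with tempfile.TemporaryDirectory() as tmp:
    path = join(tmp, "features_demo.pkl")
    first = load_or_compute(path, fake_features)   # computes and writes the file
    second = load_or_compute(path, fake_features)  # reads the file, no recomputation

print(len(calls))
```

Note that the cache is keyed only by file name, so the file has to be deleted by hand if the model or the image size changes.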

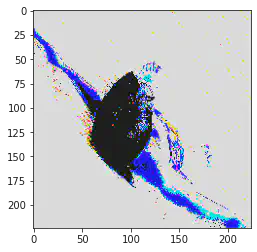

# display original and preprocessed image

example_image = "Flickr8k_Dataset/667626_18933d713e.jpg"

display(Image(example_image))

image = preprocess_image(example_image, 224)

plt.imshow(np.squeeze(image))
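The preprocessed image looks distorted when plotted because `preprocess_input` changes the pixel values. As far as we understand the Keras defaults, the VGG16 version converts RGB to BGR and subtracts the ImageNet channel means without scaling, while the InceptionV3 version scales pixels to the range [-1, 1]. The NumPy sketch below mimics both and shows how the VGG16 step could be undone for display. The function names are ours and this is not the Keras implementation itself.

```python
import numpy as np

# ImageNet channel means in B, G, R order (assumed to match the Keras "caffe" mode)
BGR_MEAN = np.array([103.939, 116.779, 123.68])


def caffe_preprocess(rgb):
    """RGB -> BGR, then subtract the per-channel mean (VGG16 style)."""
    bgr = rgb[..., ::-1].astype("float64")
    return bgr - BGR_MEAN


def caffe_deprocess(x):
    """Invert caffe_preprocess so the result can be shown with plt.imshow."""
    bgr = x + BGR_MEAN
    rgb = bgr[..., ::-1]
    # round before casting, so floating point error cannot shift a pixel value
    return np.clip(np.rint(rgb), 0, 255).astype("uint8")


def tf_preprocess(rgb):
    """Scale pixels from [0, 255] to [-1, 1] (InceptionV3 style)."""
    return rgb.astype("float64") / 127.5 - 1.0


# random stand-in for a batch with one small RGB image
rng = np.random.default_rng(0)
rgb = rng.integers(0, 256, size=(1, 4, 4, 3)).astype("uint8")

restored = caffe_deprocess(caffe_preprocess(rgb))
scaled = tf_preprocess(rgb)
print(np.array_equal(restored, rgb), scaled.min() >= -1.0, scaled.max() <= 1.0)
```

Passing something like `caffe_deprocess(image)[0]` to `plt.imshow` would then show the resized image in its original colours.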

3.2. InceptionV3 Model

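# this import replaces the VGG16 preprocess_input used by preprocess_image
from keras.applications.inception_v3 import preprocess_input

# cnn InceptionV3 model
def cnn_inceptionv3():
    # load the model
    model = InceptionV3(weights='imagenet')
    # re-structure the model: drop the final classification layer
    # and keep the output of the layer before it
    model = Model(inputs=model.inputs, outputs=model.layers[-2].output)
    # summarize
    print(model.summary())
    return model

# get cnn inceptionv3 model
# (note: this rebinds the name cnn_inceptionv3 from the function to the model)
cnn_inceptionv3 = cnn_inceptionv3()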

Downloading data from https://storage.googleapis.com/tensorflow/keras-applications/inception_v3/inception_v3_weights_tf_dim_ordering_tf_kernels.h5

96116736/96112376 [==============================] - 0s 0us/step

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 299, 299, 3) 0

__________________________________________________________________________________________________

conv2d (Conv2D) (None, 149, 149, 32) 864 input_1[0][0]

__________________________________________________________________________________________________

batch_normalization (BatchNorma (None, 149, 149, 32) 96 conv2d[0][0]

__________________________________________________________________________________________________

activation (Activation) (None, 149, 149, 32) 0 batch_normalization[0][0]

__________________________________________________________________________________________________

conv2d_1 (Conv2D) (None, 147, 147, 32) 9216 activation[0][0]

__________________________________________________________________________________________________

batch_normalization_1 (BatchNor (None, 147, 147, 32) 96 conv2d_1[0][0]

__________________________________________________________________________________________________

activation_1 (Activation) (None, 147, 147, 32) 0 batch_normalization_1[0][0]

__________________________________________________________________________________________________

conv2d_2 (Conv2D) (None, 147, 147, 64) 18432 activation_1[0][0]

__________________________________________________________________________________________________

batch_normalization_2 (BatchNor (None, 147, 147, 64) 192 conv2d_2[0][0]

__________________________________________________________________________________________________

activation_2 (Activation) (None, 147, 147, 64) 0 batch_normalization_2[0][0]

__________________________________________________________________________________________________

max_pooling2d (MaxPooling2D) (None, 73, 73, 64) 0 activation_2[0][0]

__________________________________________________________________________________________________

conv2d_3 (Conv2D) (None, 73, 73, 80) 5120 max_pooling2d[0][0]

__________________________________________________________________________________________________

batch_normalization_3 (BatchNor (None, 73, 73, 80) 240 conv2d_3[0][0]

__________________________________________________________________________________________________

activation_3 (Activation) (None, 73, 73, 80) 0 batch_normalization_3[0][0]

__________________________________________________________________________________________________

conv2d_4 (Conv2D) (None, 71, 71, 192) 138240 activation_3[0][0]

__________________________________________________________________________________________________

batch_normalization_4 (BatchNor (None, 71, 71, 192) 576 conv2d_4[0][0]

__________________________________________________________________________________________________

activation_4 (Activation) (None, 71, 71, 192) 0 batch_normalization_4[0][0]

__________________________________________________________________________________________________

max_pooling2d_1 (MaxPooling2D) (None, 35, 35, 192) 0 activation_4[0][0]

__________________________________________________________________________________________________

conv2d_8 (Conv2D) (None, 35, 35, 64) 12288 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

batch_normalization_8 (BatchNor (None, 35, 35, 64) 192 conv2d_8[0][0]

__________________________________________________________________________________________________

activation_8 (Activation) (None, 35, 35, 64) 0 batch_normalization_8[0][0]

__________________________________________________________________________________________________

conv2d_6 (Conv2D) (None, 35, 35, 48) 9216 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

conv2d_9 (Conv2D) (None, 35, 35, 96) 55296 activation_8[0][0]

__________________________________________________________________________________________________

batch_normalization_6 (BatchNor (None, 35, 35, 48) 144 conv2d_6[0][0]

__________________________________________________________________________________________________

batch_normalization_9 (BatchNor (None, 35, 35, 96) 288 conv2d_9[0][0]

__________________________________________________________________________________________________

activation_6 (Activation) (None, 35, 35, 48) 0 batch_normalization_6[0][0]

__________________________________________________________________________________________________

activation_9 (Activation) (None, 35, 35, 96) 0 batch_normalization_9[0][0]

__________________________________________________________________________________________________

average_pooling2d (AveragePooli (None, 35, 35, 192) 0 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

conv2d_5 (Conv2D) (None, 35, 35, 64) 12288 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

conv2d_7 (Conv2D) (None, 35, 35, 64) 76800 activation_6[0][0]

__________________________________________________________________________________________________

conv2d_10 (Conv2D) (None, 35, 35, 96) 82944 activation_9[0][0]

__________________________________________________________________________________________________

conv2d_11 (Conv2D) (None, 35, 35, 32) 6144 average_pooling2d[0][0]

__________________________________________________________________________________________________

batch_normalization_5 (BatchNor (None, 35, 35, 64) 192 conv2d_5[0][0]

__________________________________________________________________________________________________

batch_normalization_7 (BatchNor (None, 35, 35, 64) 192 conv2d_7[0][0]

__________________________________________________________________________________________________

batch_normalization_10 (BatchNo (None, 35, 35, 96) 288 conv2d_10[0][0]

__________________________________________________________________________________________________

batch_normalization_11 (BatchNo (None, 35, 35, 32) 96 conv2d_11[0][0]

__________________________________________________________________________________________________

activation_5 (Activation) (None, 35, 35, 64) 0 batch_normalization_5[0][0]

__________________________________________________________________________________________________

activation_7 (Activation) (None, 35, 35, 64) 0 batch_normalization_7[0][0]

__________________________________________________________________________________________________

activation_10 (Activation) (None, 35, 35, 96) 0 batch_normalization_10[0][0]

__________________________________________________________________________________________________

activation_11 (Activation) (None, 35, 35, 32) 0 batch_normalization_11[0][0]

__________________________________________________________________________________________________

mixed0 (Concatenate) (None, 35, 35, 256) 0 activation_5[0][0]

activation_7[0][0]

activation_10[0][0]

activation_11[0][0]

__________________________________________________________________________________________________

conv2d_15 (Conv2D) (None, 35, 35, 64) 16384 mixed0[0][0]

__________________________________________________________________________________________________

batch_normalization_15 (BatchNo (None, 35, 35, 64) 192 conv2d_15[0][0]

__________________________________________________________________________________________________

activation_15 (Activation) (None, 35, 35, 64) 0 batch_normalization_15[0][0]

__________________________________________________________________________________________________

conv2d_13 (Conv2D) (None, 35, 35, 48) 12288 mixed0[0][0]

__________________________________________________________________________________________________

conv2d_16 (Conv2D) (None, 35, 35, 96) 55296 activation_15[0][0]

__________________________________________________________________________________________________

batch_normalization_13 (BatchNo (None, 35, 35, 48) 144 conv2d_13[0][0]

__________________________________________________________________________________________________

batch_normalization_16 (BatchNo (None, 35, 35, 96) 288 conv2d_16[0][0]

__________________________________________________________________________________________________

activation_13 (Activation) (None, 35, 35, 48) 0 batch_normalization_13[0][0]

__________________________________________________________________________________________________

activation_16 (Activation) (None, 35, 35, 96) 0 batch_normalization_16[0][0]

__________________________________________________________________________________________________

average_pooling2d_1 (AveragePoo (None, 35, 35, 256) 0 mixed0[0][0]

__________________________________________________________________________________________________

conv2d_12 (Conv2D) (None, 35, 35, 64) 16384 mixed0[0][0]

__________________________________________________________________________________________________

conv2d_14 (Conv2D) (None, 35, 35, 64) 76800 activation_13[0][0]

__________________________________________________________________________________________________

conv2d_17 (Conv2D) (None, 35, 35, 96) 82944 activation_16[0][0]

__________________________________________________________________________________________________

conv2d_18 (Conv2D) (None, 35, 35, 64) 16384 average_pooling2d_1[0][0]

__________________________________________________________________________________________________

batch_normalization_12 (BatchNo (None, 35, 35, 64) 192 conv2d_12[0][0]

__________________________________________________________________________________________________

batch_normalization_14 (BatchNo (None, 35, 35, 64) 192 conv2d_14[0][0]

__________________________________________________________________________________________________

batch_normalization_17 (BatchNo (None, 35, 35, 96) 288 conv2d_17[0][0]

__________________________________________________________________________________________________

batch_normalization_18 (BatchNo (None, 35, 35, 64) 192 conv2d_18[0][0]

__________________________________________________________________________________________________

activation_12 (Activation) (None, 35, 35, 64) 0 batch_normalization_12[0][0]

__________________________________________________________________________________________________

activation_14 (Activation) (None, 35, 35, 64) 0 batch_normalization_14[0][0]

__________________________________________________________________________________________________

activation_17 (Activation) (None, 35, 35, 96) 0 batch_normalization_17[0][0]

__________________________________________________________________________________________________

activation_18 (Activation) (None, 35, 35, 64) 0 batch_normalization_18[0][0]

__________________________________________________________________________________________________

mixed1 (Concatenate) (None, 35, 35, 288) 0 activation_12[0][0]

activation_14[0][0]

activation_17[0][0]

activation_18[0][0]

__________________________________________________________________________________________________

conv2d_22 (Conv2D) (None, 35, 35, 64) 18432 mixed1[0][0]

__________________________________________________________________________________________________

batch_normalization_22 (BatchNo (None, 35, 35, 64) 192 conv2d_22[0][0]

__________________________________________________________________________________________________

activation_22 (Activation) (None, 35, 35, 64) 0 batch_normalization_22[0][0]

__________________________________________________________________________________________________

conv2d_20 (Conv2D) (None, 35, 35, 48) 13824 mixed1[0][0]

__________________________________________________________________________________________________

conv2d_23 (Conv2D) (None, 35, 35, 96) 55296 activation_22[0][0]

__________________________________________________________________________________________________

batch_normalization_20 (BatchNo (None, 35, 35, 48) 144 conv2d_20[0][0]

__________________________________________________________________________________________________

batch_normalization_23 (BatchNo (None, 35, 35, 96) 288 conv2d_23[0][0]

__________________________________________________________________________________________________

activation_20 (Activation) (None, 35, 35, 48) 0 batch_normalization_20[0][0]

__________________________________________________________________________________________________

activation_23 (Activation) (None, 35, 35, 96) 0 batch_normalization_23[0][0]

__________________________________________________________________________________________________

average_pooling2d_2 (AveragePoo (None, 35, 35, 288) 0 mixed1[0][0]

__________________________________________________________________________________________________

conv2d_19 (Conv2D) (None, 35, 35, 64) 18432 mixed1[0][0]

__________________________________________________________________________________________________

conv2d_21 (Conv2D) (None, 35, 35, 64) 76800 activation_20[0][0]

__________________________________________________________________________________________________

conv2d_24 (Conv2D) (None, 35, 35, 96) 82944 activation_23[0][0]

__________________________________________________________________________________________________

conv2d_25 (Conv2D) (None, 35, 35, 64) 18432 average_pooling2d_2[0][0]

__________________________________________________________________________________________________

batch_normalization_19 (BatchNo (None, 35, 35, 64) 192 conv2d_19[0][0]

__________________________________________________________________________________________________

batch_normalization_21 (BatchNo (None, 35, 35, 64) 192 conv2d_21[0][0]

__________________________________________________________________________________________________

batch_normalization_24 (BatchNo (None, 35, 35, 96) 288 conv2d_24[0][0]

__________________________________________________________________________________________________

batch_normalization_25 (BatchNo (None, 35, 35, 64) 192 conv2d_25[0][0]

__________________________________________________________________________________________________

activation_19 (Activation) (None, 35, 35, 64) 0 batch_normalization_19[0][0]

__________________________________________________________________________________________________

activation_21 (Activation) (None, 35, 35, 64) 0 batch_normalization_21[0][0]

__________________________________________________________________________________________________

activation_24 (Activation) (None, 35, 35, 96) 0 batch_normalization_24[0][0]

__________________________________________________________________________________________________

activation_25 (Activation) (None, 35, 35, 64) 0 batch_normalization_25[0][0]

__________________________________________________________________________________________________

mixed2 (Concatenate) (None, 35, 35, 288) 0 activation_19[0][0]

activation_21[0][0]

activation_24[0][0]

activation_25[0][0]

__________________________________________________________________________________________________

conv2d_27 (Conv2D) (None, 35, 35, 64) 18432 mixed2[0][0]

__________________________________________________________________________________________________

batch_normalization_27 (BatchNo (None, 35, 35, 64) 192 conv2d_27[0][0]

__________________________________________________________________________________________________

activation_27 (Activation) (None, 35, 35, 64) 0 batch_normalization_27[0][0]

__________________________________________________________________________________________________

conv2d_28 (Conv2D) (None, 35, 35, 96) 55296 activation_27[0][0]

__________________________________________________________________________________________________

batch_normalization_28 (BatchNo (None, 35, 35, 96) 288 conv2d_28[0][0]

__________________________________________________________________________________________________

activation_28 (Activation) (None, 35, 35, 96) 0 batch_normalization_28[0][0]

__________________________________________________________________________________________________

conv2d_26 (Conv2D) (None, 17, 17, 384) 995328 mixed2[0][0]

__________________________________________________________________________________________________

conv2d_29 (Conv2D) (None, 17, 17, 96) 82944 activation_28[0][0]

__________________________________________________________________________________________________

batch_normalization_26 (BatchNo (None, 17, 17, 384) 1152 conv2d_26[0][0]

__________________________________________________________________________________________________

batch_normalization_29 (BatchNo (None, 17, 17, 96) 288 conv2d_29[0][0]

__________________________________________________________________________________________________

activation_26 (Activation) (None, 17, 17, 384) 0 batch_normalization_26[0][0]

__________________________________________________________________________________________________

activation_29 (Activation) (None, 17, 17, 96) 0 batch_normalization_29[0][0]

__________________________________________________________________________________________________

max_pooling2d_2 (MaxPooling2D) (None, 17, 17, 288) 0 mixed2[0][0]

__________________________________________________________________________________________________

mixed3 (Concatenate) (None, 17, 17, 768) 0 activation_26[0][0]

activation_29[0][0]

max_pooling2d_2[0][0]

__________________________________________________________________________________________________

conv2d_34 (Conv2D) (None, 17, 17, 128) 98304 mixed3[0][0]

__________________________________________________________________________________________________

batch_normalization_34 (BatchNo (None, 17, 17, 128) 384 conv2d_34[0][0]

__________________________________________________________________________________________________

activation_34 (Activation) (None, 17, 17, 128) 0 batch_normalization_34[0][0]

__________________________________________________________________________________________________

conv2d_35 (Conv2D) (None, 17, 17, 128) 114688 activation_34[0][0]

__________________________________________________________________________________________________

batch_normalization_35 (BatchNo (None, 17, 17, 128) 384 conv2d_35[0][0]

__________________________________________________________________________________________________

activation_35 (Activation) (None, 17, 17, 128) 0 batch_normalization_35[0][0]

__________________________________________________________________________________________________

conv2d_31 (Conv2D) (None, 17, 17, 128) 98304 mixed3[0][0]

__________________________________________________________________________________________________

conv2d_36 (Conv2D) (None, 17, 17, 128) 114688 activation_35[0][0]

__________________________________________________________________________________________________

batch_normalization_31 (BatchNo (None, 17, 17, 128) 384 conv2d_31[0][0]

__________________________________________________________________________________________________

batch_normalization_36 (BatchNo (None, 17, 17, 128) 384 conv2d_36[0][0]

__________________________________________________________________________________________________

activation_31 (Activation) (None, 17, 17, 128) 0 batch_normalization_31[0][0]

__________________________________________________________________________________________________

activation_36 (Activation) (None, 17, 17, 128) 0 batch_normalization_36[0][0]

__________________________________________________________________________________________________

conv2d_32 (Conv2D) (None, 17, 17, 128) 114688 activation_31[0][0]

__________________________________________________________________________________________________

conv2d_37 (Conv2D) (None, 17, 17, 128) 114688 activation_36[0][0]

__________________________________________________________________________________________________

batch_normalization_32 (BatchNo (None, 17, 17, 128) 384 conv2d_32[0][0]

__________________________________________________________________________________________________

batch_normalization_37 (BatchNo (None, 17, 17, 128) 384 conv2d_37[0][0]

__________________________________________________________________________________________________

activation_32 (Activation) (None, 17, 17, 128) 0 batch_normalization_32[0][0]

__________________________________________________________________________________________________

activation_37 (Activation) (None, 17, 17, 128) 0 batch_normalization_37[0][0]

__________________________________________________________________________________________________

average_pooling2d_3 (AveragePoo (None, 17, 17, 768) 0 mixed3[0][0]

__________________________________________________________________________________________________

conv2d_30 (Conv2D) (None, 17, 17, 192) 147456 mixed3[0][0]

__________________________________________________________________________________________________

conv2d_33 (Conv2D) (None, 17, 17, 192) 172032 activation_32[0][0]

__________________________________________________________________________________________________

conv2d_38 (Conv2D) (None, 17, 17, 192) 172032 activation_37[0][0]

__________________________________________________________________________________________________

conv2d_39 (Conv2D) (None, 17, 17, 192) 147456 average_pooling2d_3[0][0]

__________________________________________________________________________________________________

batch_normalization_30 (BatchNo (None, 17, 17, 192) 576 conv2d_30[0][0]

__________________________________________________________________________________________________

batch_normalization_33 (BatchNo (None, 17, 17, 192) 576 conv2d_33[0][0]

__________________________________________________________________________________________________

batch_normalization_38 (BatchNo (None, 17, 17, 192) 576 conv2d_38[0][0]

__________________________________________________________________________________________________

batch_normalization_39 (BatchNo (None, 17, 17, 192) 576 conv2d_39[0][0]

__________________________________________________________________________________________________

activation_30 (Activation) (None, 17, 17, 192) 0 batch_normalization_30[0][0]

__________________________________________________________________________________________________

activation_33 (Activation) (None, 17, 17, 192) 0 batch_normalization_33[0][0]

__________________________________________________________________________________________________

activation_38 (Activation) (None, 17, 17, 192) 0 batch_normalization_38[0][0]

__________________________________________________________________________________________________

activation_39 (Activation) (None, 17, 17, 192) 0 batch_normalization_39[0][0]

__________________________________________________________________________________________________

mixed4 (Concatenate) (None, 17, 17, 768) 0 activation_30[0][0]

activation_33[0][0]

activation_38[0][0]

activation_39[0][0]

__________________________________________________________________________________________________

conv2d_44 (Conv2D) (None, 17, 17, 160) 122880 mixed4[0][0]

__________________________________________________________________________________________________

batch_normalization_44 (BatchNo (None, 17, 17, 160) 480 conv2d_44[0][0]

__________________________________________________________________________________________________

activation_44 (Activation) (None, 17, 17, 160) 0 batch_normalization_44[0][0]

__________________________________________________________________________________________________

conv2d_45 (Conv2D) (None, 17, 17, 160) 179200 activation_44[0][0]

__________________________________________________________________________________________________

batch_normalization_45 (BatchNo (None, 17, 17, 160) 480 conv2d_45[0][0]

__________________________________________________________________________________________________

activation_45 (Activation) (None, 17, 17, 160) 0 batch_normalization_45[0][0]

__________________________________________________________________________________________________

conv2d_41 (Conv2D) (None, 17, 17, 160) 122880 mixed4[0][0]

__________________________________________________________________________________________________

conv2d_46 (Conv2D) (None, 17, 17, 160) 179200 activation_45[0][0]

__________________________________________________________________________________________________

batch_normalization_41 (BatchNo (None, 17, 17, 160) 480 conv2d_41[0][0]

__________________________________________________________________________________________________

batch_normalization_46 (BatchNo (None, 17, 17, 160) 480 conv2d_46[0][0]

__________________________________________________________________________________________________

activation_41 (Activation) (None, 17, 17, 160) 0 batch_normalization_41[0][0]

__________________________________________________________________________________________________

activation_46 (Activation) (None, 17, 17, 160) 0 batch_normalization_46[0][0]

__________________________________________________________________________________________________

conv2d_42 (Conv2D) (None, 17, 17, 160) 179200 activation_41[0][0]

__________________________________________________________________________________________________

conv2d_47 (Conv2D) (None, 17, 17, 160) 179200 activation_46[0][0]

__________________________________________________________________________________________________

batch_normalization_42 (BatchNo (None, 17, 17, 160) 480 conv2d_42[0][0]

__________________________________________________________________________________________________

batch_normalization_47 (BatchNo (None, 17, 17, 160) 480 conv2d_47[0][0]

__________________________________________________________________________________________________

activation_42 (Activation) (None, 17, 17, 160) 0 batch_normalization_42[0][0]

__________________________________________________________________________________________________

activation_47 (Activation) (None, 17, 17, 160) 0 batch_normalization_47[0][0]

__________________________________________________________________________________________________

average_pooling2d_4 (AveragePoo (None, 17, 17, 768) 0 mixed4[0][0]

__________________________________________________________________________________________________

conv2d_40 (Conv2D) (None, 17, 17, 192) 147456 mixed4[0][0]

__________________________________________________________________________________________________

conv2d_43 (Conv2D) (None, 17, 17, 192) 215040 activation_42[0][0]

__________________________________________________________________________________________________

conv2d_48 (Conv2D) (None, 17, 17, 192) 215040 activation_47[0][0]

__________________________________________________________________________________________________

conv2d_49 (Conv2D) (None, 17, 17, 192) 147456 average_pooling2d_4[0][0]

__________________________________________________________________________________________________

batch_normalization_40 (BatchNo (None, 17, 17, 192) 576 conv2d_40[0][0]

__________________________________________________________________________________________________

batch_normalization_43 (BatchNo (None, 17, 17, 192) 576 conv2d_43[0][0]

__________________________________________________________________________________________________

batch_normalization_48 (BatchNo (None, 17, 17, 192) 576 conv2d_48[0][0]

__________________________________________________________________________________________________

batch_normalization_49 (BatchNo (None, 17, 17, 192) 576 conv2d_49[0][0]

__________________________________________________________________________________________________

activation_40 (Activation) (None, 17, 17, 192) 0 batch_normalization_40[0][0]

__________________________________________________________________________________________________

activation_43 (Activation) (None, 17, 17, 192) 0 batch_normalization_43[0][0]

__________________________________________________________________________________________________

activation_48 (Activation) (None, 17, 17, 192) 0 batch_normalization_48[0][0]

__________________________________________________________________________________________________

activation_49 (Activation) (None, 17, 17, 192) 0 batch_normalization_49[0][0]

__________________________________________________________________________________________________

mixed5 (Concatenate) (None, 17, 17, 768) 0 activation_40[0][0]

activation_43[0][0]

activation_48[0][0]

activation_49[0][0]

__________________________________________________________________________________________________

conv2d_54 (Conv2D) (None, 17, 17, 160) 122880 mixed5[0][0]

__________________________________________________________________________________________________

batch_normalization_54 (BatchNo (None, 17, 17, 160) 480 conv2d_54[0][0]

__________________________________________________________________________________________________

activation_54 (Activation) (None, 17, 17, 160) 0 batch_normalization_54[0][0]

__________________________________________________________________________________________________

conv2d_55 (Conv2D) (None, 17, 17, 160) 179200 activation_54[0][0]

__________________________________________________________________________________________________

batch_normalization_55 (BatchNo (None, 17, 17, 160) 480 conv2d_55[0][0]

__________________________________________________________________________________________________

activation_55 (Activation) (None, 17, 17, 160) 0 batch_normalization_55[0][0]

__________________________________________________________________________________________________

conv2d_51 (Conv2D) (None, 17, 17, 160) 122880 mixed5[0][0]

__________________________________________________________________________________________________

conv2d_56 (Conv2D) (None, 17, 17, 160) 179200 activation_55[0][0]

__________________________________________________________________________________________________

batch_normalization_51 (BatchNo (None, 17, 17, 160) 480 conv2d_51[0][0]

__________________________________________________________________________________________________

batch_normalization_56 (BatchNo (None, 17, 17, 160) 480 conv2d_56[0][0]

__________________________________________________________________________________________________

activation_51 (Activation) (None, 17, 17, 160) 0 batch_normalization_51[0][0]

__________________________________________________________________________________________________

activation_56 (Activation) (None, 17, 17, 160) 0 batch_normalization_56[0][0]

__________________________________________________________________________________________________

conv2d_52 (Conv2D) (None, 17, 17, 160) 179200 activation_51[0][0]

__________________________________________________________________________________________________

conv2d_57 (Conv2D) (None, 17, 17, 160) 179200 activation_56[0][0]

__________________________________________________________________________________________________

batch_normalization_52 (BatchNo (None, 17, 17, 160) 480 conv2d_52[0][0]

__________________________________________________________________________________________________

batch_normalization_57 (BatchNo (None, 17, 17, 160) 480 conv2d_57[0][0]

__________________________________________________________________________________________________

activation_52 (Activation) (None, 17, 17, 160) 0 batch_normalization_52[0][0]

__________________________________________________________________________________________________

activation_57 (Activation) (None, 17, 17, 160) 0 batch_normalization_57[0][0]

__________________________________________________________________________________________________

average_pooling2d_5 (AveragePoo (None, 17, 17, 768) 0 mixed5[0][0]

__________________________________________________________________________________________________

conv2d_50 (Conv2D) (None, 17, 17, 192) 147456 mixed5[0][0]

__________________________________________________________________________________________________

conv2d_53 (Conv2D) (None, 17, 17, 192) 215040 activation_52[0][0]

__________________________________________________________________________________________________

conv2d_58 (Conv2D) (None, 17, 17, 192) 215040 activation_57[0][0]

__________________________________________________________________________________________________

conv2d_59 (Conv2D) (None, 17, 17, 192) 147456 average_pooling2d_5[0][0]

__________________________________________________________________________________________________

batch_normalization_50 (BatchNo (None, 17, 17, 192) 576 conv2d_50[0][0]

__________________________________________________________________________________________________

batch_normalization_53 (BatchNo (None, 17, 17, 192) 576 conv2d_53[0][0]

__________________________________________________________________________________________________

batch_normalization_58 (BatchNo (None, 17, 17, 192) 576 conv2d_58[0][0]

__________________________________________________________________________________________________

batch_normalization_59 (BatchNo (None, 17, 17, 192) 576 conv2d_59[0][0]

__________________________________________________________________________________________________

activation_50 (Activation) (None, 17, 17, 192) 0 batch_normalization_50[0][0]

__________________________________________________________________________________________________

activation_53 (Activation) (None, 17, 17, 192) 0 batch_normalization_53[0][0]

__________________________________________________________________________________________________

activation_58 (Activation) (None, 17, 17, 192) 0 batch_normalization_58[0][0]

__________________________________________________________________________________________________

activation_59 (Activation) (None, 17, 17, 192) 0 batch_normalization_59[0][0]

__________________________________________________________________________________________________

mixed6 (Concatenate) (None, 17, 17, 768) 0 activation_50[0][0]

activation_53[0][0]

activation_58[0][0]

activation_59[0][0]

__________________________________________________________________________________________________

conv2d_64 (Conv2D) (None, 17, 17, 192) 147456 mixed6[0][0]

__________________________________________________________________________________________________

batch_normalization_64 (BatchNo (None, 17, 17, 192) 576 conv2d_64[0][0]

__________________________________________________________________________________________________

activation_64 (Activation) (None, 17, 17, 192) 0 batch_normalization_64[0][0]

__________________________________________________________________________________________________

conv2d_65 (Conv2D) (None, 17, 17, 192) 258048 activation_64[0][0]

__________________________________________________________________________________________________

batch_normalization_65 (BatchNo (None, 17, 17, 192) 576 conv2d_65[0][0]

__________________________________________________________________________________________________

activation_65 (Activation) (None, 17, 17, 192) 0 batch_normalization_65[0][0]

__________________________________________________________________________________________________

conv2d_61 (Conv2D) (None, 17, 17, 192) 147456 mixed6[0][0]

__________________________________________________________________________________________________

conv2d_66 (Conv2D) (None, 17, 17, 192) 258048 activation_65[0][0]

__________________________________________________________________________________________________

batch_normalization_61 (BatchNo (None, 17, 17, 192) 576 conv2d_61[0][0]

__________________________________________________________________________________________________

batch_normalization_66 (BatchNo (None, 17, 17, 192) 576 conv2d_66[0][0]

__________________________________________________________________________________________________

activation_61 (Activation) (None, 17, 17, 192) 0 batch_normalization_61[0][0]

__________________________________________________________________________________________________

activation_66 (Activation) (None, 17, 17, 192) 0 batch_normalization_66[0][0]

__________________________________________________________________________________________________

conv2d_62 (Conv2D) (None, 17, 17, 192) 258048 activation_61[0][0]

__________________________________________________________________________________________________

conv2d_67 (Conv2D) (None, 17, 17, 192) 258048 activation_66[0][0]

__________________________________________________________________________________________________

batch_normalization_62 (BatchNo (None, 17, 17, 192) 576 conv2d_62[0][0]

__________________________________________________________________________________________________

batch_normalization_67 (BatchNo (None, 17, 17, 192) 576 conv2d_67[0][0]

__________________________________________________________________________________________________

activation_62 (Activation) (None, 17, 17, 192) 0 batch_normalization_62[0][0]

__________________________________________________________________________________________________

activation_67 (Activation) (None, 17, 17, 192) 0 batch_normalization_67[0][0]

__________________________________________________________________________________________________

average_pooling2d_6 (AveragePoo (None, 17, 17, 768) 0 mixed6[0][0]

__________________________________________________________________________________________________

conv2d_60 (Conv2D) (None, 17, 17, 192) 147456 mixed6[0][0]

__________________________________________________________________________________________________

conv2d_63 (Conv2D) (None, 17, 17, 192) 258048 activation_62[0][0]

__________________________________________________________________________________________________

conv2d_68 (Conv2D) (None, 17, 17, 192) 258048 activation_67[0][0]

__________________________________________________________________________________________________

conv2d_69 (Conv2D) (None, 17, 17, 192) 147456 average_pooling2d_6[0][0]

__________________________________________________________________________________________________

batch_normalization_60 (BatchNo (None, 17, 17, 192) 576 conv2d_60[0][0]

__________________________________________________________________________________________________

batch_normalization_63 (BatchNo (None, 17, 17, 192) 576 conv2d_63[0][0]

__________________________________________________________________________________________________

batch_normalization_68 (BatchNo (None, 17, 17, 192) 576 conv2d_68[0][0]

__________________________________________________________________________________________________

batch_normalization_69 (BatchNo (None, 17, 17, 192) 576 conv2d_69[0][0]

__________________________________________________________________________________________________

activation_60 (Activation) (None, 17, 17, 192) 0 batch_normalization_60[0][0]

__________________________________________________________________________________________________

activation_63 (Activation) (None, 17, 17, 192) 0 batch_normalization_63[0][0]

__________________________________________________________________________________________________

activation_68 (Activation) (None, 17, 17, 192) 0 batch_normalization_68[0][0]

__________________________________________________________________________________________________

activation_69 (Activation) (None, 17, 17, 192) 0 batch_normalization_69[0][0]

__________________________________________________________________________________________________

mixed7 (Concatenate) (None, 17, 17, 768) 0 activation_60[0][0]

activation_63[0][0]

activation_68[0][0]

activation_69[0][0]

__________________________________________________________________________________________________

conv2d_72 (Conv2D) (None, 17, 17, 192) 147456 mixed7[0][0]

__________________________________________________________________________________________________

batch_normalization_72 (BatchNo (None, 17, 17, 192) 576 conv2d_72[0][0]

__________________________________________________________________________________________________

activation_72 (Activation) (None, 17, 17, 192) 0 batch_normalization_72[0][0]

__________________________________________________________________________________________________

conv2d_73 (Conv2D) (None, 17, 17, 192) 258048 activation_72[0][0]

__________________________________________________________________________________________________

batch_normalization_73 (BatchNo (None, 17, 17, 192) 576 conv2d_73[0][0]

__________________________________________________________________________________________________

activation_73 (Activation) (None, 17, 17, 192) 0 batch_normalization_73[0][0]

__________________________________________________________________________________________________

conv2d_70 (Conv2D) (None, 17, 17, 192) 147456 mixed7[0][0]

__________________________________________________________________________________________________

conv2d_74 (Conv2D) (None, 17, 17, 192) 258048 activation_73[0][0]

__________________________________________________________________________________________________

batch_normalization_70 (BatchNo (None, 17, 17, 192) 576 conv2d_70[0][0]

__________________________________________________________________________________________________

batch_normalization_74 (BatchNo (None, 17, 17, 192) 576 conv2d_74[0][0]

__________________________________________________________________________________________________

activation_70 (Activation) (None, 17, 17, 192) 0 batch_normalization_70[0][0]

__________________________________________________________________________________________________

activation_74 (Activation) (None, 17, 17, 192) 0 batch_normalization_74[0][0]

__________________________________________________________________________________________________

conv2d_71 (Conv2D) (None, 8, 8, 320) 552960 activation_70[0][0]

__________________________________________________________________________________________________

conv2d_75 (Conv2D) (None, 8, 8, 192) 331776 activation_74[0][0]

__________________________________________________________________________________________________

batch_normalization_71 (BatchNo (None, 8, 8, 320) 960 conv2d_71[0][0]

__________________________________________________________________________________________________

batch_normalization_75 (BatchNo (None, 8, 8, 192) 576 conv2d_75[0][0]

__________________________________________________________________________________________________

activation_71 (Activation) (None, 8, 8, 320) 0 batch_normalization_71[0][0]

__________________________________________________________________________________________________

activation_75 (Activation) (None, 8, 8, 192) 0 batch_normalization_75[0][0]

__________________________________________________________________________________________________

max_pooling2d_3 (MaxPooling2D) (None, 8, 8, 768) 0 mixed7[0][0]

__________________________________________________________________________________________________

mixed8 (Concatenate) (None, 8, 8, 1280) 0 activation_71[0][0]

activation_75[0][0]

max_pooling2d_3[0][0]

__________________________________________________________________________________________________

conv2d_80 (Conv2D) (None, 8, 8, 448) 573440 mixed8[0][0]

__________________________________________________________________________________________________

batch_normalization_80 (BatchNo (None, 8, 8, 448) 1344 conv2d_80[0][0]

__________________________________________________________________________________________________

activation_80 (Activation) (None, 8, 8, 448) 0 batch_normalization_80[0][0]

__________________________________________________________________________________________________

conv2d_77 (Conv2D) (None, 8, 8, 384) 491520 mixed8[0][0]

__________________________________________________________________________________________________

conv2d_81 (Conv2D) (None, 8, 8, 384) 1548288 activation_80[0][0]

__________________________________________________________________________________________________

batch_normalization_77 (BatchNo (None, 8, 8, 384) 1152 conv2d_77[0][0]

__________________________________________________________________________________________________

batch_normalization_81 (BatchNo (None, 8, 8, 384) 1152 conv2d_81[0][0]

__________________________________________________________________________________________________

activation_77 (Activation) (None, 8, 8, 384) 0 batch_normalization_77[0][0]

__________________________________________________________________________________________________

activation_81 (Activation) (None, 8, 8, 384) 0 batch_normalization_81[0][0]

__________________________________________________________________________________________________

conv2d_78 (Conv2D) (None, 8, 8, 384) 442368 activation_77[0][0]

__________________________________________________________________________________________________

conv2d_79 (Conv2D) (None, 8, 8, 384) 442368 activation_77[0][0]

__________________________________________________________________________________________________

conv2d_82 (Conv2D) (None, 8, 8, 384) 442368 activation_81[0][0]

__________________________________________________________________________________________________

conv2d_83 (Conv2D) (None, 8, 8, 384) 442368 activation_81[0][0]

__________________________________________________________________________________________________

average_pooling2d_7 (AveragePoo (None, 8, 8, 1280) 0 mixed8[0][0]

__________________________________________________________________________________________________

conv2d_76 (Conv2D) (None, 8, 8, 320) 409600 mixed8[0][0]

__________________________________________________________________________________________________

batch_normalization_78 (BatchNo (None, 8, 8, 384) 1152 conv2d_78[0][0]

__________________________________________________________________________________________________

batch_normalization_79 (BatchNo (None, 8, 8, 384) 1152 conv2d_79[0][0]

__________________________________________________________________________________________________

batch_normalization_82 (BatchNo (None, 8, 8, 384) 1152 conv2d_82[0][0]

__________________________________________________________________________________________________

batch_normalization_83 (BatchNo (None, 8, 8, 384) 1152 conv2d_83[0][0]

__________________________________________________________________________________________________

conv2d_84 (Conv2D) (None, 8, 8, 192) 245760 average_pooling2d_7[0][0]

__________________________________________________________________________________________________

batch_normalization_76 (BatchNo (None, 8, 8, 320) 960 conv2d_76[0][0]

__________________________________________________________________________________________________

activation_78 (Activation) (None, 8, 8, 384) 0 batch_normalization_78[0][0]

__________________________________________________________________________________________________

activation_79 (Activation) (None, 8, 8, 384) 0 batch_normalization_79[0][0]

__________________________________________________________________________________________________

activation_82 (Activation) (None, 8, 8, 384) 0 batch_normalization_82[0][0]

__________________________________________________________________________________________________

activation_83 (Activation) (None, 8, 8, 384) 0 batch_normalization_83[0][0]

__________________________________________________________________________________________________

batch_normalization_84 (BatchNo (None, 8, 8, 192) 576 conv2d_84[0][0]

__________________________________________________________________________________________________

activation_76 (Activation) (None, 8, 8, 320) 0 batch_normalization_76[0][0]

__________________________________________________________________________________________________

mixed9_0 (Concatenate) (None, 8, 8, 768) 0 activation_78[0][0]

activation_79[0][0]

__________________________________________________________________________________________________

concatenate (Concatenate) (None, 8, 8, 768) 0 activation_82[0][0]

activation_83[0][0]

__________________________________________________________________________________________________

activation_84 (Activation) (None, 8, 8, 192) 0 batch_normalization_84[0][0]

__________________________________________________________________________________________________

mixed9 (Concatenate) (None, 8, 8, 2048) 0 activation_76[0][0]

mixed9_0[0][0]

concatenate[0][0]

activation_84[0][0]

__________________________________________________________________________________________________

conv2d_89 (Conv2D) (None, 8, 8, 448) 917504 mixed9[0][0]

__________________________________________________________________________________________________

batch_normalization_89 (BatchNo (None, 8, 8, 448) 1344 conv2d_89[0][0]

__________________________________________________________________________________________________

activation_89 (Activation) (None, 8, 8, 448) 0 batch_normalization_89[0][0]

__________________________________________________________________________________________________

conv2d_86 (Conv2D) (None, 8, 8, 384) 786432 mixed9[0][0]

__________________________________________________________________________________________________

conv2d_90 (Conv2D) (None, 8, 8, 384) 1548288 activation_89[0][0]

__________________________________________________________________________________________________

batch_normalization_86 (BatchNo (None, 8, 8, 384) 1152 conv2d_86[0][0]

__________________________________________________________________________________________________

batch_normalization_90 (BatchNo (None, 8, 8, 384) 1152 conv2d_90[0][0]

__________________________________________________________________________________________________

activation_86 (Activation) (None, 8, 8, 384) 0 batch_normalization_86[0][0]

__________________________________________________________________________________________________

activation_90 (Activation) (None, 8, 8, 384) 0 batch_normalization_90[0][0]

__________________________________________________________________________________________________

conv2d_87 (Conv2D) (None, 8, 8, 384) 442368 activation_86[0][0]

__________________________________________________________________________________________________

conv2d_88 (Conv2D) (None, 8, 8, 384) 442368 activation_86[0][0]

__________________________________________________________________________________________________

conv2d_91 (Conv2D) (None, 8, 8, 384) 442368 activation_90[0][0]

__________________________________________________________________________________________________

conv2d_92 (Conv2D) (None, 8, 8, 384) 442368 activation_90[0][0]

__________________________________________________________________________________________________

average_pooling2d_8 (AveragePoo (None, 8, 8, 2048) 0 mixed9[0][0]

__________________________________________________________________________________________________

conv2d_85 (Conv2D) (None, 8, 8, 320) 655360 mixed9[0][0]

__________________________________________________________________________________________________

batch_normalization_87 (BatchNo (None, 8, 8, 384) 1152 conv2d_87[0][0]

__________________________________________________________________________________________________

batch_normalization_88 (BatchNo (None, 8, 8, 384) 1152 conv2d_88[0][0]

__________________________________________________________________________________________________

batch_normalization_91 (BatchNo (None, 8, 8, 384) 1152 conv2d_91[0][0]

__________________________________________________________________________________________________

batch_normalization_92 (BatchNo (None, 8, 8, 384) 1152 conv2d_92[0][0]

__________________________________________________________________________________________________

conv2d_93 (Conv2D) (None, 8, 8, 192) 393216 average_pooling2d_8[0][0]

__________________________________________________________________________________________________

batch_normalization_85 (BatchNo (None, 8, 8, 320) 960 conv2d_85[0][0]

__________________________________________________________________________________________________

activation_87 (Activation) (None, 8, 8, 384) 0 batch_normalization_87[0][0]

__________________________________________________________________________________________________

activation_88 (Activation) (None, 8, 8, 384) 0 batch_normalization_88[0][0]

__________________________________________________________________________________________________

activation_91 (Activation) (None, 8, 8, 384) 0 batch_normalization_91[0][0]

__________________________________________________________________________________________________

activation_92 (Activation) (None, 8, 8, 384) 0 batch_normalization_92[0][0]

__________________________________________________________________________________________________

batch_normalization_93 (BatchNo (None, 8, 8, 192) 576 conv2d_93[0][0]

__________________________________________________________________________________________________

activation_85 (Activation) (None, 8, 8, 320) 0 batch_normalization_85[0][0]

__________________________________________________________________________________________________

mixed9_1 (Concatenate) (None, 8, 8, 768) 0 activation_87[0][0]

activation_88[0][0]

__________________________________________________________________________________________________

concatenate_1 (Concatenate) (None, 8, 8, 768) 0 activation_91[0][0]

activation_92[0][0]

__________________________________________________________________________________________________

activation_93 (Activation) (None, 8, 8, 192) 0 batch_normalization_93[0][0]

__________________________________________________________________________________________________

mixed10 (Concatenate) (None, 8, 8, 2048) 0 activation_85[0][0]

mixed9_1[0][0]

concatenate_1[0][0]

activation_93[0][0]

__________________________________________________________________________________________________

avg_pool (GlobalAveragePooling2 (None, 2048) 0 mixed10[0][0]

==================================================================================================

Total params: 21,802,784

Trainable params: 21,768,352

Non-trainable params: 34,432

__________________________________________________________________________________________________

None
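The parameter counts in the summary above can be checked by hand. The following is a minimal sketch, not part of the original notebook. The helper names `conv_params` and `bn_params` are hypothetical, and the kernel sizes are assumptions chosen to be consistent with the counts shown. It also assumes the convolutions carry no bias term and that batch normalization keeps three values per channel (beta, moving mean, moving variance), which matches the 480 parameters listed for a 160-channel layer.

```python
def conv_params(kernel_h, kernel_w, in_channels, out_channels, use_bias=False):
    """Number of weights in a 2D convolution layer."""
    weights = kernel_h * kernel_w * in_channels * out_channels
    return weights + (out_channels if use_bias else 0)


def bn_params(channels, scale=False):
    """Number of values stored by a batch normalization layer.

    beta, moving mean and moving variance are always kept;
    gamma is only present when scale=True.
    """
    return channels * (4 if scale else 3)


# conv2d_44: assumed 1x1 kernel, 768 -> 160 channels
print(conv_params(1, 1, 768, 160))
# conv2d_45: assumed 1x7 kernel, 160 -> 160 channels
print(conv_params(1, 7, 160, 160))
# conv2d_71: assumed 3x3 kernel, 192 -> 320 channels
print(conv_params(3, 3, 192, 320))
# batch_normalization_44: 160 channels
print(bn_params(160))
# trainable + non-trainable should equal the reported total
print(21768352 + 34432)
```

Under these assumptions the values match the 122880, 179200, 552960 and 480 listed for those layers, and the last line reproduces the reported total of 21,802,784.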

# plot model

filename = 'models/cnn_inceptionv3.png'

plot(cnn_inceptionv3, filename)

filename = 'files/features_inceptionv3.pkl'

# only extract if file does not exist

if not isfile(filename):
    # extract features from all images with InceptionV3 (299x299 input)
    directory = 'Flickr8k_Dataset'
    features = extract_features(directory, cnn_inceptionv3, 299)
    # save to file
    with open(filename, 'wb') as f:
        dump(features, f)

# display original and preprocessed image

example_image = "Flickr8k_Dataset/667626_18933d713e.jpg"

display(Image(example_image))

image = preprocess_image(example_image, 299)

plt.imshow(np.squeeze(image))

4. Prepare Text Data

# load doc into memory

def load_doc(filename):
    # open the file as read only, read all text, and close it on exit
    with open(filename, 'r') as file:
        text = file.read()
    return text

# extract descriptions for images

def load_descriptions(doc):
    mapping = dict()
    # process lines
    for line in doc.split('\n'):
        # split line by white space
        tokens = line.split()
        # skip blank lines and lines that have no description
        if len(tokens) < 2:
            continue
        # take the first token as the image id, the rest as the description
        image_id, image_desc = tokens[0], tokens[1:]
        # remove file extension from image id
        image_id = image_id.split('.')[0]
        # convert description tokens back to string
        image_desc = ' '.join(image_desc)
        # create the list if needed
        if image_id not in mapping:
            mapping[image_id] = list()
        # store description
        mapping[image_id].append(image_desc)
    return mapping

# clean descriptions in place (the dictionary is modified, nothing is returned)
def clean_descriptions(descriptions):
    # prepare translation table for removing punctuation
    table = str.maketrans('', '', string.punctuation)
    for key, desc_list in descriptions.items():
        for i in range(len(desc_list)):
            desc = desc_list[i]
            # tokenize
            desc = desc.split()
            # convert to lower case
            desc = [word.lower() for word in desc]
            # remove punctuation from each token
            desc = [w.translate(table) for w in desc]
            # remove hanging 's' and 'a'
            desc = [word for word in desc if len(word) > 1]
            # remove tokens with numbers in them
            desc = [word for word in desc if word.isalpha()]
            # store as string
            desc_list[i] = ' '.join(desc)

# convert the loaded descriptions into a vocabulary of words
def to_vocabulary(descriptions):
    # build a set of all words that occur in the descriptions
    all_desc = set()
    for key in descriptions.keys():
        for d in descriptions[key]:
            all_desc.update(d.split())
    return all_desc

# save descriptions to file, one per line
def save_descriptions(descriptions, filename):
    lines = list()
    for key, desc_list in descriptions.items():
        for desc in desc_list:
            lines.append(key + ' ' + desc)
    data = '\n'.join(lines)
    with open(filename, 'w') as file:
        file.write(data)

filename = 'Flickr8k_text/Flickr8k.token.txt'

# load descriptions

doc = load_doc(filename)

# parse descriptions

descriptions = load_descriptions(doc)

print('Loaded: %d ' % len(descriptions))

Loaded: 8092

display(Image(example_image))

example_id = '667626_18933d713e'

descriptions[example_id]

['A girl is stretched out in shallow water',

'A girl wearing a red and multi-colored bikini is laying on her back in shallow water .',

'A little girl in a red swimsuit is laying on her back in shallow water .',

'A young girl is lying in the sand , while ocean water is surrounding her .',

'Girl wearing a bikini lying on her back in a shallow pool of clear blue water .']
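
To make the parsing step concrete, here is a minimal sketch of what `load_descriptions` does with a single line. It assumes the usual Flickr8k layout of `name.jpg#index`, a tab, and then the caption. The helper name `parse_token_line` is hypothetical.

```python
def parse_token_line(line):
    """Split one caption line into (image id, caption), mirroring load_descriptions."""
    tokens = line.split()
    # the first token looks like '667626_18933d713e.jpg#0'; keep the part before '.'
    image_id = tokens[0].split('.')[0]
    # the remaining tokens form the caption
    caption = ' '.join(tokens[1:])
    return image_id, caption


sample = '667626_18933d713e.jpg#0\tA girl is stretched out in shallow water'
image_id, caption = parse_token_line(sample)
print(image_id)
print(caption)
```

Splitting on the first `.` drops both the file extension and the `#index` suffix, which is why all five captions of an image end up under the same key.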

# clean descriptions

clean_descriptions(descriptions)

# summarize vocabulary

vocabulary = to_vocabulary(descriptions)

print('Vocabulary Size: %d' % len(vocabulary))

# save to file

save_descriptions(descriptions, 'files/descriptions.txt')

Vocabulary Size: 8763

descriptions[example_id]

['girl is stretched out in shallow water',

'girl wearing red and multicolored bikini is laying on her back in shallow water',

'little girl in red swimsuit is laying on her back in shallow water',

'young girl is lying in the sand while ocean water is surrounding her',

'girl wearing bikini lying on her back in shallow pool of clear blue water']
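
The effect of each cleaning step is easier to follow on a single caption. The sketch below applies the same steps as `clean_descriptions` to one string. The function name `clean_caption` is hypothetical, and the filters are merged into fewer passes than in the notebook.

```python
import string


def clean_caption(caption):
    """Lower-case, strip punctuation, and drop one-letter or non-alphabetic tokens."""
    table = str.maketrans('', '', string.punctuation)
    # lower-case every token and delete punctuation characters inside it
    words = [word.lower().translate(table) for word in caption.split()]
    # drop leftovers such as 'a', 's', empty strings, and tokens containing digits
    words = [word for word in words if len(word) > 1 and word.isalpha()]
    return ' '.join(words)


raw = 'A girl wearing a red and multi-colored bikini is laying on her back in shallow water .'
print(clean_caption(raw))
```

Note that removing punctuation joins hyphenated words ("multi-colored" becomes "multicolored") instead of splitting them.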

5. Load Data

# load a pre-defined list of photo identifiers
def load_set(filename):
    doc = load_doc(filename)
    dataset = list()
    # process line by line
    for line in doc.split('\n'):
        # skip empty lines
        if len(line) < 1:
            continue
        # get the image identifier
        identifier = line.split('.')[0]
        dataset.append(identifier)
    return set(dataset)

# load clean descriptions into memory
def load_clean_descriptions(filename, dataset):
    # load document
    doc = load_doc(filename)
    descriptions = dict()
    for line in doc.split('\n'):
        # split line by white space
        tokens = line.split()
        # skip empty lines, which would otherwise raise an IndexError
        if len(tokens) < 1:
            continue
        # split id from description
        image_id, image_desc = tokens[0], tokens[1:]
        # skip images not in the set
        if image_id in dataset:
            # create list
            if image_id not in descriptions:
                descriptions[image_id] = list()
            # wrap description in tokens
            desc = '<start> ' + ' '.join(image_desc) + ' <end>'
            # store
            descriptions[image_id].append(desc)
    return descriptions

# load photo features
def load_photo_features(filename, dataset):
    # load all features
    with open(filename, 'rb') as file:
        all_features = load(file)
    # filter features
    features = {k: all_features[k] for k in dataset}
    return features

5.1. Train Data

# load training dataset (6K)

filename = 'Flickr8k_text/Flickr_8k.trainImages.txt'

train = load_set(filename)

print('Dataset: %d' % len(train))

# descriptions

train_descriptions = load_clean_descriptions('files/descriptions.txt', train)

print('Descriptions: train=%d' % len(train_descriptions))

# photo features

train_features = load_photo_features('files/features_vgg16.pkl', train)  # or 'files/features_inceptionv3.pkl'

print('Photos: train=%d' % len(train_features))

Dataset: 6000

Descriptions: train=6000

Photos: train=6000

display(Image(example_image))

# <start> and <end> added

train_descriptions[example_id]

['<start> girl is stretched out in shallow water <end>',

'<start> girl wearing red and multicolored bikini is laying on her back in shallow water <end>',

'<start> little girl in red swimsuit is laying on her back in shallow water <end>',

'<start> young girl is lying in the sand while ocean water is surrounding her <end>',

'<start> girl wearing bikini lying on her back in shallow pool of clear blue water <end>']

# features of previous image

train_features[example_id]

array([[0.39258254, 0.17952543, 0.5020331 , ..., 0.31590924, 0.616592 ,

0.37674323]], dtype=float32)
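
The filtering and wrapping done by `load_clean_descriptions` can be illustrated on a few in-memory lines instead of a file. This is only a sketch: `wrap_captions` and the toy identifiers `img_a` and `img_b` are hypothetical.

```python
def wrap_captions(lines, dataset):
    """Keep captions whose image id is in dataset and wrap them in <start>/<end>."""
    descriptions = {}
    for line in lines:
        tokens = line.split()
        # skip empty lines
        if not tokens:
            continue
        image_id, words = tokens[0], tokens[1:]
        # ignore images that belong to another split
        if image_id in dataset:
            wrapped = '<start> ' + ' '.join(words) + ' <end>'
            descriptions.setdefault(image_id, []).append(wrapped)
    return descriptions


lines = [
    'img_a dog runs on grass',
    'img_b child plays in water',
    '',
    'img_a brown dog is running',
]
train_ids = {'img_a'}
wrapped = wrap_captions(lines, train_ids)
print(wrapped)
```

The `<start>` and `<end>` markers give the language model a fixed first input and a signal for when to stop generating.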

5.2. Validation Data

# load val dataset

filename = 'Flickr8k_text/Flickr_8k.devImages.txt'

val = load_set(filename)

print('Dataset: %d' % len(val))

# descriptions

val_descriptions = load_clean_descriptions('files/descriptions.txt', val)

print('Descriptions: val=%d' % len(val_descriptions))

# photo features

val_features = load_photo_features('files/features_vgg16.pkl', val)  # or 'files/features_inceptionv3.pkl'

print('Photos: val=%d' % len(val_features))

Dataset: 1000

Descriptions: val=1000

Photos: val=1000

5.3. Test Data

# load test set

filename = 'Flickr8k_text/Flickr_8k.testImages.txt'

test = load_set(filename)

print('Dataset: %d' % len(test))

# descriptions

test_descriptions = load_clean_descriptions('files/descriptions.txt', test)

print('Descriptions: test=%d' % len(test_descriptions))

# photo features

test_features = load_photo_features('files/features_vgg16.pkl', test)  # or 'files/features_inceptionv3.pkl'

print('Photos: test=%d' % len(test_features))

Dataset: 1000

Descriptions: test=1000

Photos: test=1000

6. Encode Text Data

# convert a dictionary of clean descriptions to a list of descriptions
def to_lines(descriptions):
    all_desc = list()
    for key in descriptions.keys():
        all_desc.extend(descriptions[key])
    return all_desc

# fit a tokenizer given caption descriptions
def create_tokenizer(descriptions):
    lines = to_lines(descriptions)
    # '<' and '>' are left out of the filters so that <start> and <end> survive
    tokenizer = Tokenizer(filters='!"#$%&()*+.,-/:;=?@[\\]^_`{|}~ ')
    tokenizer.fit_on_texts(lines)
    return tokenizer

# calculate the length of the description with the most words
def max_len(descriptions):
    lines = to_lines(descriptions)
    return max(len(d.split()) for d in lines)

# prepare tokenizer
filename = 'files/tokenizer.pkl'

# only create tokenizer if it does not exist
if not isfile(filename):
    tokenizer = create_tokenizer(train_descriptions)
    # save the tokenizer
    with open(filename, 'wb') as file:
        dump(tokenizer, file)
else:
    with open(filename, 'rb') as file:
        tokenizer = load(file)

# define vocabulary size

vocab_size = len(tokenizer.word_index) + 1

print('Vocabulary Size: %d' % vocab_size)

# determine the maximum sequence length

max_length = max_len(train_descriptions)

print('Description Length: %d' % max_length)

Vocabulary Size: 7579

Description Length: 34

# 10 most common words

list(tokenizer.word_index.items())[:10]

[('<start>', 1),

('<end>', 2),

('in', 3),

('the', 4),

('on', 5),

('is', 6),

('and', 7),

('dog', 8),

('with', 9),

('man', 10)]

# 10 least common words

list(tokenizer.word_index.items())[-10:]

[('exotic', 7569),

('swatting', 7570),

('containig', 7571),

('rainstorm', 7572),

('breezeway', 7573),

('cocker', 7574),

('spaniels', 7575),

('majestically', 7576),

('scrolled', 7577),

('patterns', 7578)]
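
The word index ranks words by frequency and starts at 1, which is why the vocabulary size is the number of words plus one: index 0 is kept free for padding. The sketch below reproduces that ranking with the standard library only. `build_word_index` is a hypothetical stand-in for illustration, not the Keras implementation.

```python
from collections import Counter


def build_word_index(captions):
    """Map each word to a rank starting at 1, most frequent word first."""
    counts = Counter(word for caption in captions for word in caption.split())
    # most_common() sorts by descending count; ties keep first-seen order
    return {word: rank for rank, (word, _) in enumerate(counts.most_common(), start=1)}


captions = ['<start> dog runs <end>', '<start> dog sits <end>']
word_index = build_word_index(captions)

# index 0 is reserved for padding, so the vocabulary needs one extra slot
vocab_size = len(word_index) + 1
# the longest caption fixes the length of the padded input sequences
max_length = max(len(caption.split()) for caption in captions)

print(word_index)
print(vocab_size, max_length)
```

Because `<start>` and `<end>` occur in every caption, they are always the two most frequent tokens in the real index as well.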

7. Define Model

from keras.backend import clear_session

clear_session()

7.1. Model 1

# define the captioning model
def rnn_model_1(vocab_size, max_length, embedding_size, units, input_size):
    # feature extractor model
    inputs1 = Input(shape=(input_size,))
    fe1 = Dropout(0.5)(inputs1)
    fe2 = Dense(embedding_size, activation='relu')(fe1)
    # sequence model
    inputs2 = Input(shape=(max_length,))
    se1 = Embedding(vocab_size, embedding_size, mask_zero=True)(inputs2)
    se2 = Dropout(0.5)(se1)
    se3 = LSTM(units)(se2)
    # decoder model (add needs equal shapes, so embedding_size must equal units)
    decoder1 = add([fe2, se3])
    decoder2 = Dense(units, activation='relu')(decoder1)
    outputs = Dense(vocab_size, activation='softmax')(decoder2)
    # tie it together [image, seq] [word]
    model = Model(inputs=[inputs1, inputs2], outputs=outputs)
    model.compile(loss='categorical_crossentropy', optimizer='adam')
    # summarize model (summary() prints itself; wrapping it in print adds a stray None)
    model.summary()
    return model

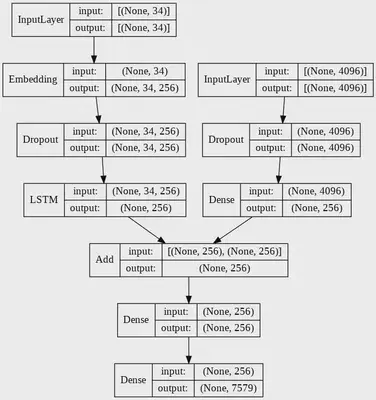

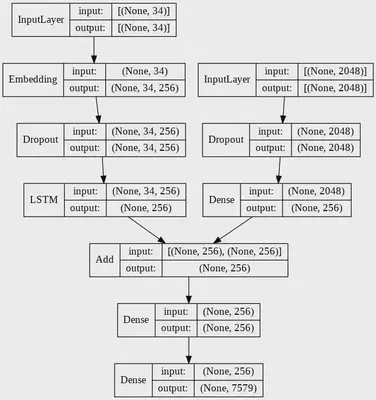

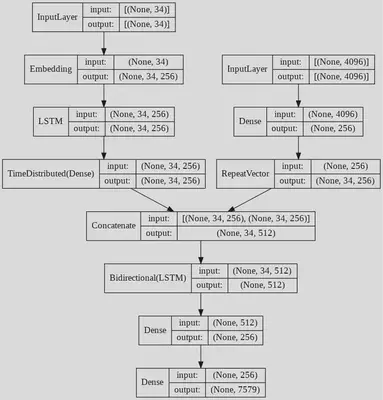

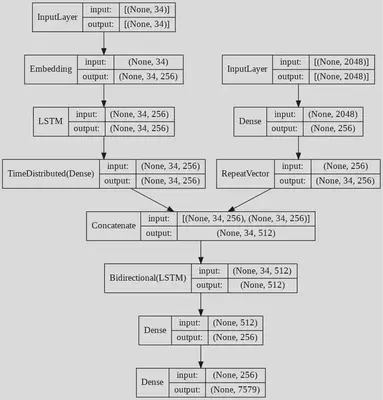
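
The size of each layer in Model 1 follows directly from its arguments. As a rough sketch, the parameter counts can be worked out by hand with the standard formulas for dense, embedding, and LSTM layers. The helper names are hypothetical. The values are the ones used for the VGG16 variant: a vocabulary of 7579 words, 256 for both the embedding size and the units, and 4096 image features.

```python
def dense_params(n_in, n_out):
    """Weights plus one bias per output unit."""
    return n_in * n_out + n_out


def embedding_params(vocab_size, embedding_size):
    """One vector of length embedding_size per vocabulary index."""
    return vocab_size * embedding_size


def lstm_params(n_in, units):
    """Four gates, each with input weights, recurrent weights, and a bias."""
    return 4 * ((n_in + units) * units + units)


vocab_size, embedding_size, units, input_size = 7579, 256, 256, 4096

counts = {
    'image dense': dense_params(input_size, embedding_size),
    'embedding': embedding_params(vocab_size, embedding_size),
    'lstm': lstm_params(embedding_size, units),
    'decoder dense': dense_params(units, units),
    'output dense': dense_params(units, vocab_size),
}

for name, count in counts.items():
    print('%-14s %d' % (name, count))
print('%-14s %d' % ('total', sum(counts.values())))
```

The embedding count computed this way, 1940224, matches the value reported for the embedding layer in the model summary. The dropout, input, and add layers have no parameters.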

7.1.1. Model 1 VGG16

# define the model

embedding_size = 256

units = 256

input_size = 4096

model = rnn_model_1(vocab_size, max_length, embedding_size, units, input_size)

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_2 (InputLayer) [(None, 34)] 0

__________________________________________________________________________________________________

input_1 (InputLayer) [(None, 4096)] 0

__________________________________________________________________________________________________

embedding (Embedding) (None, 34, 256) 1940224 input_2[0][0]

__________________________________________________________________________________________________

dropout (Dropout) (None, 4096) 0 input_1[0][0]

__________________________________________________________________________________________________

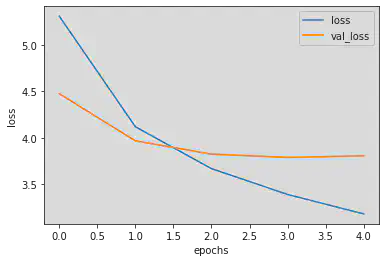

dropout_1 (Dropout) (None, 34, 256) 0 embedding[0][0]